Monday, July 31, 2017

Rebecca Slayton can write and has a cool name

This is a really great paper you can read here. Highly recommend it for its much more in-depth analysis of why offense is probably expensive. At the end, she goes into a cost/benefit analysis of both offense and defense for Stuxnet, which in my opinion is the weakest part of the paper. You can't say vulnerabilities cost 0 dollars if they came from internal sources. And you can't just "average" the two possibilities you have and come up with a guess for how much something cost.

I mean, the whole paper suffers a bit from that: if you're not intimately familiar with putting together offensive programs, there are many, many moving pieces you don't account for in your spreadsheet. That's something you learn when running any business, really. On the other hand, she's not on Twitter, so maybe she DOES have experience in fedworld and just doesn't want to go into depth?

Also, there's no discussion of opportunity costs. A three-month delay in releasing a product (equally true for web applications and nuclear bombs) can be infinitely expensive.

But aside from that, this is the kind of work the policy world needs to start doing. Five stars, would read again.

I mean, the simpler way of saying this is the NSA mantra, which is that whoever knows the network better, controls it. And defenders obviously have a home-field advantage... :)

Quotas as a Cyber Norm

|

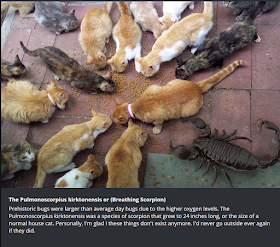

| Originally I chose this picture as a way of illustrating perspective around different problems we have. But now I want a giant scorpion pet! So win-win! |

Part of the security community's issue with the VEP (Vulnerabilities Equities Process) is that it addresses a tiny problem that Microsoft has blown all out of proportion for no reason, and distracts attention from the really major and direct policy problems in cyber, namely:

- Backdoored supply chains

- National Security Letters (and their cousin the All Writs Act as the FBI interprets it)

- Trojaned Cryptographic Standards

Vulnerabilities have many natural limits, like giant scorpions needing oxygen. If nothing else, it costs money to find, test, and exploit them, even assuming they are infinite in supply, which I assure you they are not. Likewise, a company with a good software development practice can mitigate vulnerabilities; there is a way for it to handle that kind of risk. A backdoored cryptographic standard or supply chain attack cannot be mitigated, other than by investing a lot of money in tamper-proof bags, which is probably an unreasonable thing to ask Cisco to do.

Deep down, forcing the bureaucracy to prioritize actions that have no "cost" to it but high risk for an American company makes a lot more sense than something like the VEP, which imposes a prioritization calculus on something that is already high cost to the government.

Essentially what I'm asking for here is this: Limit the number of times a year we intercept a package from a vendor for backdooring. Maybe even publish that number? We could do this only for certain countries, perhaps? There are so many options, and all of them are better than "We do whatever we want to the supply chain and let the US companies bear those risks."

Likewise, do we have a policy on asking US companies to send backdoored updates to customers? Is it "Whenever it's important as we secretly define it?"

Imagine if China said, "Look, we backdoor two Huawei routers a year for intelligence purposes." Is that an OK norm to set?

Friday, July 28, 2017

A partial retraction of the Belfer paper

https://www.lawfareblog.com/what-you-see-what-you-get-revisions-our-paper-estimating-vulnerability-rediscovery

As a scientist, I had the same reaction to the Belfer VEP paper that a climate scientist would have to a new "science" paper claiming the Earth was going into an ice age. So you can imagine my "surprise" when it got partially retracted a few days after publication, in response to my spending a few spare hours on it the afternoon of the day after it was originally released.

Look, on the face of it, this paper's remaining numbers cannot be right. There are going to be obvious confounding variables and glaring statistical flaws in any version of this document that claims 15% of all bugs collide between two independent bug finders within the conditions this paper uses. They haven't released the new paper and data yet, so we will have to wait to find out what they are. But if you're in the mood for a Michael Bay movie-style trailer, I will say this: The answer is fuzzers, fuzzers, and more fuzzers. A better title for this paper would have been "Modern fuzzers are very complex and have a weird effect on bug tracking systems and also we have some unsubstantiated opinions on the VEP".

The only way to study these sorts of things is to get truly intimate with the data. This requires YEARS OF WORK reading reports daily about vulnerabilities and also experience writing and using exploits. Nobody in policy-land with a political science or international relations background wants to hear that. It sounds like something a total jerk would say. I get that and for sure Ari Schwartz gets that. But also I get that this is not a simple field where we can label a few things with a pocket-sized methodology and expect real data, the way this paper tried to.

An example of how not being connected to the bugs goes horribly wrong was published on this blog previously, when a different Belfer "researcher" (Mailyn Fidler) had to correct her post on Stuxnet and bug collisions TWICE IN A ROW because she lacked the understanding of the bugs themselves needed to know when her thesis was obviously wrong.

As with the current paper, she eventually claimed her "conclusion didn't change" even though the data had changed drastically. That's a telling statement that divides evidence-based policy creation from ideological nonsense. Just as an ideological point of reference, Bruce Schneier, one of the current paper's authors, was also one of the people who worked with the Guardian on the Snowden Archive.

The perfect paper on bug collision would probably find that the issue is multi-dimensional, and hardly a linear question of a "percentage". And any real effort to determine how this policy works against our real adversaries would be TOP SECRET CODEWORD at a minimum.

*"Did not ever look at the data - hope the other two data sources are ok?"*

Friday, July 21, 2017

Something is very wrong with the Belfer bug rediscovery paper

This is what the paper says about its Chromium data:

Chrome: The Chrome dataset is scraped from bugs collected for Chromium, an open source software project whose code constitutes most the Chrome browser.[20] On top of Chromium, Google adds a few additional features, such as a PDF viewer, but there is substantial overlap, so we treat this as essentially identical to Chrome.[21] Chrome presented a similar problem to Firefox, so to record only vulnerabilities with a reasonable likelihood of public discovery, we limited our collection to bugs labeled as high or critical severity from the Chromium bug tracker. This portion of the dataset comprises 3,397 vulnerability records of which there are 468 records with duplicates. For Chrome, we coded a vulnerability record as a duplicate if it had been merged with another, where merges were noted in the comments associated with each vulnerability record, or marked as a Duplicate in the Status field.

The problem with this methodology is simply that "merges" do not indicate rediscovery in the database. The vast majority of the findings relied upon for the paper are false positives.

To check this, I went through their spreadsheet and pulled out the 468 records mentioned. Then I examined them on the Chromium bug tracking system. The vast majority of them were "self-duplicates" from the automated fuzzing and crash detection systems.

*I'm a Unix hacker so I converted it to CSV and wrote a Python script to look at the data. Happy to share scripts/data.*

Looking at just the ones that have a CVE or got a reward makes more sense. There are probably only 45 true positives in this data set (i.e., the ones with CVE numbers). That's 1.3% (45 of 3,397), which agrees with the number from the much cleaner OpenSSL Bug Database (2.4%) in this same paper.
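For anyone who wants to redo this, here is a minimal sketch of the filtering described above. It is not my actual script. It assumes the spreadsheet was exported to CSV with hypothetical columns `is_duplicate`, `reporter`, `merged_reporter`, and `cve` (the real Belfer columns are named differently), and it treats `ClusterFuzz` as the only automated reporter, which is also an assumption. It drops merges where an automated system collided with itself, keeps only duplicates with a CVE, and divides by the total record count.

```python
import csv
import io

# Hypothetical reporter name for the automated fuzzing/crash systems;
# the real Chromium tracker labels may differ.
AUTOMATED_REPORTERS = {"ClusterFuzz"}


def true_positive_duplicates(rows):
    """Keep only "duplicate" records that plausibly reflect independent rediscovery.

    Each row is a dict with these hypothetical keys:
      is_duplicate    -- "yes" if the paper coded the record as a duplicate
      reporter        -- who filed this record
      merged_reporter -- who filed the record it was merged with
      cve             -- CVE identifier, or "" if none
    """
    kept = []
    for row in rows:
        if row["is_duplicate"] != "yes":
            continue
        # An automated system colliding with itself is a self-duplicate.
        if row["reporter"] in AUTOMATED_REPORTERS and row["reporter"] == row["merged_reporter"]:
            continue
        # Heuristic: only count records that were assigned a CVE.
        if row["cve"]:
            kept.append(row)
    return kept


def rediscovery_rate(rows):
    """Fraction of all records that survive the filter above."""
    rows = list(rows)
    return len(true_positive_duplicates(rows)) / len(rows)


# Made-up sample rows with placeholder CVE IDs, for illustration only.
SAMPLE = """id,is_duplicate,reporter,merged_reporter,cve
1,yes,ClusterFuzz,ClusterFuzz,
2,yes,alice,ClusterFuzz,CVE-XXXX-0001
3,no,bob,,
4,yes,ClusterFuzz,ClusterFuzz,CVE-XXXX-0002
"""

if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(SAMPLE)))
    print(rediscovery_rate(rows))  # 1 of 4 sample rows survives: 0.25
    print(f"{45 / 3397:.1%}")      # the Chromium figure above: 1.3%
```

The CVE test is only a heuristic: as the notes below show, even some CVE-bearing merges turn out to be one root cause crashing in many ways, so there is no substitute for reading the reports.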

---

Notes:

Example false positives in their data set:

- This one has a CVE, but it doesn't appear to be a true positive; people just noticed one root cause crashing things in many different ways.

- Here someone at Google manually found something that ClusterFuzz also found.

- Here is another clear false positive. Here's another. Literally, I just take any one of them and look at it.

- Interesting one from SkyLined, but also a false positive, I think.

Thursday, July 20, 2017

Decoding Kaspersky

http://fortune.com/2017/07/16/kaspersky-russia-us/

Although various internet blowhards are hard at work asking for "More information to be released" regarding why the US is throwing Kaspersky under the bus, that's never going to happen. It's honestly easiest to get in the press by pretending to be in disbelief as to what the United States is doing in situations like this.

I say pretend because it's really pretty clear what the US is saying. They are saying, through leaks and not-so-subtle hints, that Kaspersky was involved in Russian operations. It's not about "being close to the Kremlin" or historical ties between Eugene Kaspersky and the FSB or some kind of DDoS prevention software. Those are not actionable in the way this has been messaged at the highest levels. It's not some sort of nebulous "Russian Software" risk. It's about a line being crossed operationally.

The only question is whether you believe Eugene Kaspersky, who denies anything untoward, or the US Intelligence Community, which has used its strongest language and spokespeople as part of this effort and has no plans to release evidence.

And, in this particular case, the UK intel team (which has no doubt seen the evidence) is backing the US up, which is worth noting, and they are doing it in their customary subtle but unmistakable way, by saying at no point was Kaspersky software ever certified by their NCSC.

The question for security consultants, such as Immunity, is how do we advise our US-based clients - and looking at the evidence, you would have to advise them to stop using Kaspersky software. Perhaps your clients are better off with VenusTech?

*I'm pretty sure this AV company is deceased!*

Bugmusment 2017

The Paper itself:

Commentary:

*Note that the paper in the selected area would be TS/SCI for both us and China. :)*

*(To be honest, I don't think it even does this)*

- https://twitter.com/daveaitel/status/888031853273329664

- https://twitter.com/daveaitel/status/888046303892168704

- https://twitter.com/daveaitel/status/888045737006825472

- https://twitter.com/daveaitel/status/888042663164903427

*Cannot be true.*

Ok, so I can see how it went. After the Rand paper on 0day collisions came out, authors already in the middle of papers about how evil it was that the Government knew about 0days were up a creek without a paddle, or even a boat of any kind.

Because here's the thing: The Rand paper's data agrees with every vulnerability researcher's "gut feeling" on 0day collision. You won't take a 5% over a year number to a penetration testing company and have them say, "NO WAY THAT IS MUCH TOO LOW!"

But if you were to take a 20% number to them, they would probably think something was wrong with your data. Which is exactly what I thought.

So I went to the data! Because UNLIKE the Rand paper, you can check out their GitHub, which is how all science should work. The only problem is, when you dig into the data, it does not say what the paper says it does!

Here is the data! https://github.com/mase-gh/Vulnerability-Rediscovery

From what I can tell, the Chromium data comes from fuzzers, which naturally collide a lot, especially since in most of the cases I can click through, the "rediscovery" is the exact same fuzzer hitting the same bug over and over in slightly different ways. The Android collisions I examined manually were almost all various libstagefright media parsing bugs, which are found by fuzzers. A few seemed to be errors. In some cases, a CVE covers more than one bug, which makes it LOOK like they are collisions when they are not. This is a CVE issue more than anything else, but it skews the results significantly.
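To make the CVE problem concrete, here is a small sketch on made-up data. The `root_cause` label is hypothetical: it is the judgment an analyst can only make after actually reading each report. Counting "any CVE with more than one report" as a collision double-counts a CVE that bundles two unrelated bugs, while grouping by CVE *and* root cause does not.

```python
from collections import defaultdict

# Made-up reports: (bug_id, cve, root_cause). root_cause is a hypothetical
# label an analyst would assign only after actually reading each report.
SAMPLE_BUGS = [
    (1, "CVE-A", "heap overflow in parser X"),
    (2, "CVE-A", "use-after-free in parser Y"),     # different bug, same CVE
    (3, "CVE-B", "integer overflow in decoder Z"),
    (4, "CVE-B", "integer overflow in decoder Z"),  # genuine duplicate
]


def naive_collisions(bugs):
    """Count CVEs shared by more than one report (the naive 'collision' view)."""
    by_cve = defaultdict(set)
    for bug_id, cve, _cause in bugs:
        by_cve[cve].add(bug_id)
    return sum(1 for ids in by_cve.values() if len(ids) > 1)


def true_collisions(bugs):
    """Count CVEs where at least two reports share the same root cause."""
    by_cve_and_cause = defaultdict(set)
    for bug_id, cve, cause in bugs:
        by_cve_and_cause[(cve, cause)].add(bug_id)
    return len({cve for (cve, _cause), ids in by_cve_and_cause.items() if len(ids) > 1})


if __name__ == "__main__":
    print(naive_collisions(SAMPLE_BUGS))  # 2
    print(true_collisions(SAMPLE_BUGS))   # 1
```

Half of the naive "collisions" here vanish once someone looks at the bugs, which is the whole point: the hard part is the labeling, not the counting.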

Ok, so to sum up:

The data I've looked at manually does not look like it supports the paper. This kind of research is hard specifically because manual analysis of this level of data is time consuming and requires subject matter experts.

It would be worth going in depth into the leaked exploits from ShadowBrokers etc. to see if they support any of the figures used in any of the papers on these subjects. I mean, it's hard not to note that Bruce Schneier has access to the Snowden files. Maybe there are some statistics about exploits in there that the rest of us haven't seen and he's trying to hint at?

*This was the paragraph in the paper that worried me the most. There is NO ABILITY TO SCIENTIFICALLY HAVE ANY LEVEL OF PRECISION AS CLAIMED HERE.*

Wednesday, July 19, 2017

An important note about 0days

https://twitter.com/ErrataRob/status/886942470113808384

For some reason, the idea that patches == exploits, and therefore that any VEP-like program that releases patches is basically also trickling out exploits, is hard to understand if you haven't done it.
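A minimal illustration of why, assuming a hypothetical C routine with a missing length check. In practice attackers diff the compiled binaries before and after a vendor update with dedicated binary-diffing tools; here Python's standard `difflib.ndiff` on source stands in for that. The lines a patch adds usually point straight at the bug.

```python
import difflib

# Hypothetical vulnerable and patched versions of one C routine.
VULNERABLE = """\
int parse(const char *buf, size_t len) {
    char tmp[64];
    memcpy(tmp, buf, len);
    return tmp[0];
}
"""

PATCHED = """\
int parse(const char *buf, size_t len) {
    char tmp[64];
    if (len > sizeof(tmp))
        return -1;
    memcpy(tmp, buf, len);
    return tmp[0];
}
"""


def added_lines(old, new):
    """Return the lines the patch adds; ndiff marks them with a '+ ' prefix."""
    diff = difflib.ndiff(old.splitlines(), new.splitlines())
    return [line[2:] for line in diff if line.startswith("+ ")]


if __name__ == "__main__":
    for line in added_lines(VULNERABLE, PATCHED):
        print(line)
    # The new bounds check tells an attacker that memcpy() overflowed tmp
    # whenever len > 64, which is most of the way to an exploit.
```

The defender ships two lines of fix; the attacker reads those same two lines as a map to the vulnerability.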

Also, here's a very useful quick note from the head of Project Zero:

Issues with "Indiscriminate Attacks" in the Cyber Domain

c.f.: https://www.cyberscoop.com/petya-malmare-war-crime-tallinn-manual/

The fundamental nature of targeting in the cyber domain is very different from conventional military standards. In particular, with enough recon, you can say to a high degree "Even though I released a worm that will destroy every computer it touches, I don't think it will kill anyone or cause permanent loss of function for vital infrastructure."

For example, if I have SIGINT captures that say that the major hospitals have decent backup and recovery plans, and the country itself has put their power companies on notice to be able to handle computer failures, I may have an understanding of my worm's projected effects that nobody else does or can.

Clearly another historical exception is if my destructive payload is only applicable to certain very specific SCADA configurations. Yes, there are going to be some companies that interact poorly with my exploits and rootkit, and will have some temporary damage. But we've all decided that even a worm that wipes every computer is not "destroying vital infrastructure" unless it is targeted specifically at vital infrastructure and in a way that causes permanent damage. Sony Pictures and Saudi Aramco do still exist, after all, and they are not "hardened targets".

The main issue is this: You cannot know, from the worm or public information, what my targeting information has told me and you cannot even begin to ask until you understand the code. Analyzing Stuxnet took MONTHS OF HARD WORK. And almost certainly, this analysis was only successful because of leprechaun-like luck, and there are still many parts of it which are not well understood.

So combine two things: the inability to determine after the fact whether a worm or other tool was released with a minimal chance of death or injury (because you don't know my targeting parameters), and the technical difficulty of examining my code itself for "intent". Together they put International Law frameworks on a Tokyo-level shaky foundation. Of course, the added complication is that all of cyber goes over civilian infrastructure, which moots that angle as a differentiating legal analysis.

In other words, we cannot say that NotPetya was an "indiscriminate weapon".

Many of the big governmental processes try to find a way to attach "intent" to code, and fall on their face. The Wassenaar Arrangement's cyber regs are one example. In general, International Law and Policy students will say this problem exists in every domain, but in Cyber it's a dominant disruptive force.

Monday, July 17, 2017

The Multi-Stakeholder Approach

I was struck listening to this policy panel as it weaved and dodged to avoid really confronting the hard questions it raised.

*Asking for lexicons is weak sauce.*

*True enough.*

*Jane Holl Lute never has anything interesting to say on Cyber. Here's a review of her Black Hat keynote: click. It's pretty telling about the policy community that she still gets on these panels.*

Ok, here are some of the hard questions that got left on the cutting room floor:

What can we do about the information security community not trusting what the USG says?

What are the values of the information security community, and what would it mean to respect them in a multi-stakeholder environment on the Internet?

What are we going to do if we can't detangle all these issues?

Wednesday, July 12, 2017

What Kaspersky Means for Cyber Policy

Context: https://www.bloomberg.com/news/articles/2017-07-11/kaspersky-lab-has-been-working-with-russian-intelligence

Kaspersky has officially and unofficially denied any wrongdoing of any kind. But on the other hand, the recent actions by the US Government have not been subtle. The question is whether you believe McCain and Rubio and the IC over Eugene Kaspersky. It is clear from public reports that there is damning, but classified evidence which the US has no intention of releasing.

And there will be impact from the ban: while it's true that government agencies are "free" to still buy Kaspersky products, it's unlikely any agency will do so, other than as part of a migration plan onto a GSA-approved product.

If you've been to any US conference recently, you've seen the sad, sad Huawei booth, run by a "reseller" who would just as soon have the Huawei name removed from his equipment lines and unread brochures. This is what awaits Kaspersky in the US market, and there does not seem to be a way to fight it.

While this action only directly affects US Agencies (further bans may follow in legislation), it would be difficult to be a US Bank (aka, Critical Infrastructure) and continue using their software, and this could have widespread repercussions (as almost all banks are tightly connected and that is a huge market to lose for Kaspersky). Likewise, cyber insurance plans may require migrating off Kaspersky as a "known risk".

Examining what Kaspersky could have done to generate this reaction, you also have to note there are no mitigating factors available for recourse. The offer to let people look at the source code means nothing, since Kaspersky's AV is by definition a self-updating rootkit. So let's go over the kinds of things it could have been:

- Hack Back assistance (aka, "Active Countermeasures", as hinted at in the Bloomberg Report)

- HUMINT cooperation (e.g., at their yearly Security Analyst Summit)

- Influence operations (aka, ThreatPost, which is an interesting side venture for an AV)

The USG has not said what Kaspersky did that was so bad. What we've said is one clear thing: There is a line. Don't cross it.

As most of my friends say: It's about time.

Monday, July 10, 2017

Mass Surveillance and Targeted Hacking are the Same Thing

So I recommend you give Morgan Marquis-Boire's T2 keynote a watch. It takes him a while to really get started, but he gets rolling about 20 minutes in.

I think there is one really good quick takeaway from the talk: Targeted and Mass Surveillance are the same thing. This is annoying, because ideologically the entire community wants to draw lines around one or the other, i.e., Targeted Good, Mass Bad.

But the reason you do targeted is to enable mass, and the reason you do mass is to enable targeted. And both are conjoined with "Software Backdoors implanted in the supply chain" in a way that is inextricable. Those of us in the 90's hacker scene used to say that the best way to crack a password was to just grep through your lists of that person's passwords. I.e., we always had a million things hacked that we maintained the way a cartoon rabbit maintains its carrot patch, and that's how we did targeted attacks.

So whatever policy decisions you're going to make have to take this into account, and I think that's where the truly hard part starts.

Sunday, July 9, 2017

MAP(Distributed Systems Are Scary)

*Everything in this paragraph is wrong in an interesting way but we're going to focus on the DNS thing today.*

So I went back over the Lawfareblog post on the GGE failure and I wanted to point something very specific out: The Global Domain Name System is not something we should save. Also, the last thing we want the GGE doing is negotiating on the "proliferation of cyber tools", but thankfully that is a story for another day (never).

When I went to university for Computer Science, I only needed a B average to keep my NSA scholarship. And RPI didn't have any notion of prerequisites other than a giant pile of cash in the form of tuition, which the NSA was paying for me. So freshman year I started signing up for random grad-level classes. These have a different grading system: A (you did OK), B (you understand it, but your labs might not have worked at all), C (you didn't do well at all).

The only grad-level class I got a C in was Computer Security.

One of the advanced classes I signed up for was parallel programming, which back then was done on IBM RS/6000s running a special C compiler and some sort of mainframe timeshare architecture. They'd put a ton of effort into making it seem like you were programming in normal C with just a special macro API. But memory accesses could sometimes be network calls, every program was really running on a thousand cores, and you couldn't really predict when things would happen. And because the compiler chain was "Next Gen" (aka, buggy as shit), you didn't get useful error messages, just like programming on Google's API today!

It was an early weird machine. You either got it or you didn't. And a lot of the other students refused to let go of the idea that they could control the order of everything. In the end, their programs, which looked right, just didn't work or ran as slow as a dead moose, and they had no way to figure out why. They had learned to program in C, but they had never learned to program.

Last Thursday I was in DC at a trendy bar with some people who have a lot more experience at policy. And one of them (who I will only name as a "Senior Government Official", because he is, and because being called that will annoy him) at one point exasperatedly said "We should just ban taking money out of Bitcoin." He may have said "We should ban putting money INTO bitcoin." I can't remember. The cocktails at this place are so good the bartenders wear overalls and fake black-rimmed eyeglasses. I was pretty toasted, in other words, so I didn't press him on this, even though I should have.

I've been to a ton of policy meetings, both in the US and abroad, where high level government officials have wanted a "driver's license for the Internet" or "Let's ban exploit trade" or "Let's just ban bitcoin". These are all the kind of ideas that result from not understanding the weird distributed machine that is Internet Society.

Yes, as a protocol DNS is a rotten eyeball on the end of a stick poked deep into a lake full of hungry piranhas. But the correct solution is to MOVE AWAY FROM DNS. It is not to try to get everyone to agree not to attack it.

DNS is not important in any real sense. If it went away, we could create another name-to-IP system that would work fine, be more secure, and not have, say, Unicode issues, scalability issues, and literally every other issue. We don't move away simply because governments (and the companies that run DNS) love DNS. They love it centrally controlled and they love how much money they can make selling it and managing it.

Nobody technical would have suggested this brain-dead idea of agreeing not to attack DNS. What's next, no attacking FTP servers? All ICMP packets must be faithfully transmitted! They would have then sent around the "evil bit" RFC as a laugh and moved on with their lives.
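For the non-technical reader, the joke is easy to show. RFC 3514 (an April Fools' RFC) assigns the reserved high-order bit of the IPv4 flags/fragment-offset field as the "evil bit", which attackers are supposed to set on malicious packets. A minimal sketch of the corresponding check, assuming a raw 20-byte IPv4 header with no options; `make_header` is a hypothetical helper for the example only:

```python
import struct

# RFC 3514: the reserved high-order bit of the flags/fragment-offset word.
EVIL_BIT = 0x8000


def is_evil(ipv4_header: bytes) -> bool:
    """Return True if the RFC 3514 'evil bit' is set in an IPv4 header."""
    if len(ipv4_header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes")
    # Bytes 6-7 hold the 3 flag bits plus the 13-bit fragment offset.
    (flags_and_offset,) = struct.unpack_from("!H", ipv4_header, 6)
    return bool(flags_and_offset & EVIL_BIT)


def make_header(evil: bool) -> bytes:
    """Hypothetical helper: a minimal IPv4/TCP header, checksum left as zero."""
    flags = EVIL_BIT if evil else 0x4000  # 0x4000 is Don't Fragment
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0, flags, 64, 6, 0,      # ver/IHL, TOS, len, id, flags, TTL, proto, csum
        bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2]),
    )


if __name__ == "__main__":
    print(is_evil(make_header(True)))   # True
    print(is_evil(make_header(False)))  # False
```

The check is trivial to write and works only if attackers volunteer to set the bit, which is roughly what a norm of "everyone agrees not to attack DNS" relies on.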

I'll admit to not being at the meeting, and not knowing the details of the proposal. But I'm confident it was the kind of silliness every technical person in this business would have stopped if they had the chance. This says something else about why the GGE failed...

Friday, July 7, 2017

Reflections on the GGE "failure"

Surprise

Despite years of discussion and study, some participants continue to contend that it is premature to make such a determination and, in fact, seem to want to walk back progress made in previous GGE reports. I am coming to the unfortunate conclusion that those who are unwilling to affirm the applicability of these international legal rules and principles believe their States are free to act in or through cyberspace to achieve their political ends with no limits or constraints on their actions. That is a dangerous and unsupportable view, and it is one that I unequivocally reject. - Michele G. Markoff, Deputy Coordinator for Cyber Issues

The key thing to understand about the State Department team negotiating this is they appear to be SURPRISED that things fell apart. But it was entirely predictable, at least to the five people who read this blog.

The factors that weigh like a millstone around the neck of our cyber diplomacy efforts, including our efforts in the UN, and NATO, and bilaterally, are all quite loud.

Internal Incohesion

The United States and every other country have many competing views internally and no way of resolving any of the equities issues. The Kaspersky case is the most recent example of this. Assume Kaspersky did something as bad as what Huawei and ZTE did, but something you can't prove without killing a source, which you are unwilling to do. Likewise, are we willing to say that whatever we are accusing Kaspersky of, in secret, we don't do ourselves?

The Kaspersky dilemma continues even beyond that: We can either fail to act on whatever they did, which means we have no deterrence on anything ever (our current position), or we can unilaterally act without public justification, which acknowledges a completely balkanized internet forever.

As many people have pointed out, information security rules that governments enforce (i.e. no crypto we can't crack, we must see your source code, etc.) are essentially massively powerful trade barriers.

On every issue, we, and every other country, are split.

Misunderstanding around the Role of Non-Nation-States

Google and Microsoft have been most vocal about needing a new position when it comes to how technology companies are treated. But Twitter is also engaged in a lawsuit against the US Government. And the entire information security community is still extremely hostile to any implementation of the Wassenaar Arrangement's cyber tools agenda (negotiated by Michele Markoff, I think!).

The big danger is this: When the information security community and big companies are resisting government efforts in one area, it poisons all other areas of communication. We are trying to drag these companies and their associated technical community along a road like a recalcitrant horse and we are surprised we are not making headway. It means that when our IC makes claims about attribution of cyber attacks, they are met with disbelief by default.

Likewise, we still, for whatever reason, feel we have an edge in many areas where we (the USG) do not. Google attributed the WannaCry attacks to NK weeks before the IC was able to publish their document on it. DHS's "indicators of compromise" on recent malware (including the Russian DNC malware) have been amateur-grade.

On many of these issues, nation-states are no longer speaking with a voice of authority and we have failed to recognize this.

The equities issues are also ruinous, and we have yet to have a public policy on even the most obvious and easy ones:

- Yes/No/Sometimes: The United States should be able to go to a small US-based accounting software firm and say "We would like you to attach the following trojan to your next software update for this customer".

- Yes/No/Sometimes: The US should interdict a shipment of Cisco routers to add hardware to it.

So many articles full of hyperbole have been written about the exploits the ShadowBrokers stole from the USG (allegedly) that even when we get the equities issues right, it looks wrong. Microsoft is without any reasonable argument on vulnerabilities equities, but that doesn't mean every part of every company's threat model has to include the US Government.

Attempt at "Large Principle Agreement" without Understanding the Tech

The aim of modern cyber norms is to be able to literally codify your agreements. Cyber decisions get made by autonomous code and need to be stated with that level of clarity. What this means is that if the standard database of "IP"->"Country" mapping (MaxMind) says you are in Iran, then you are in Iran!
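To make "literally codify" concrete, here is a minimal Python sketch, assuming a hypothetical in-memory GeoIP table standing in for a real database like MaxMind's (the networks are RFC 5737 documentation ranges and the country assignments are invented). The point is that the norm check never asks where a machine really is; whatever the table says is the answer.

```python
import ipaddress
from typing import Optional, Set

# Hypothetical stand-in for a GeoIP database such as MaxMind's. The networks are
# RFC 5737 documentation ranges; the country codes are invented for illustration.
GEOIP_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "IR",
    ipaddress.ip_network("198.51.100.0/24"): "US",
    ipaddress.ip_network("203.0.113.0/24"): "FI",
}


def country_of(ip: str) -> Optional[str]:
    """Return whatever country the table assigns to ip, or None if unmapped."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEOIP_TABLE.items():
        if addr in network:
            return country
    return None


def norm_permits(target_ip: str, protected_countries: Set[str]) -> bool:
    """Hypothetical codified norm: no operations against protected countries.

    Addresses the table cannot place are refused, because the rule cannot be
    evaluated for them.
    """
    country = country_of(target_ip)
    return country is not None and country not in protected_countries


if __name__ == "__main__":
    protected = {"IR"}
    for ip in ("192.0.2.10", "203.0.113.7", "10.0.0.1"):
        print(ip, country_of(ip), norm_permits(ip, protected))
```

Refusing unmapped addresses is a design choice in this sketch, not part of any real agreement; a codified norm has to pick some answer for the cases its data does not cover, which is exactly the kind of detail large policy documents leave undefined.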

If you try to do what the GGE did, you get exactly what happened at GGE - people are happy to agree on a large sweeping statement but only one where they define every word in it differently than you do and then later take it back.

You will often see "Let's not attack critical infrastructure" and "Let's not attack CERTs" held up as examples of norms, but imagine how you would code those in real life! You can't! The Tallinn documents are also full of nonsensical items like "cyber booby traps" and other ports from previous domains that cannot be represented as code, and hence are obviously not going to stand up over time.

What this points to is that we should be building our cyber norms process out of technical standards, with a thin layer of policy, not massive policy documents with a technology afterthought.

Summary

If you and your wife disagree on the definition of the term "cheating" then you're both happy to agree that cheating is bad. But if you then later go on to try to define the term in such a way that it doesn't apply to cigar-related events and in a sense gerrymanders your activities as "OK" retroactively, your wife is going to pull out of the whole agreement and it's not confusing or surprising why. That's what happened at the GGE and in all of our cyber norms efforts.

Wednesday, July 5, 2017

It Was Always Worms (in my heart!)

A New Age

1990s-2001: Worms (Code Red, etc.)

2002: Bill Gates Trustworthy Computing Email

2005-2015: Botnets and "APT" and Phishing <--THE ANOMALY DECADE.

2016: Advanced Persistent Worms! Worms everywhere! Internet of Worms!

2018: Defensive worms as policy teams catch up, in my optimistic worldview.

I'll be honest: Stuxnet and Flame and Duqu and the other tools (built during the anomaly decade) were worms, at their heart. All top-line nation-state tools are capable of autonomous operation. This means the state of play on the internet can change rapidly, with changing intent, rather than after a massive five-year wait while people develop and test new toolchains.
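One way to see why autonomous tools change the state of play so quickly is the classic susceptible-infected (SI) epidemic model that has long been used to describe random-scanning worms. The sketch below is a discrete-time version; the population, starting count, and per-step infection rate `beta` are hypothetical parameters, not measurements of any real worm. Nothing in the loop waits for an operator, so growth is limited only by how fast infected hosts find new ones.

```python
from typing import List


def si_worm(population: int, initially_infected: int, beta: float, steps: int) -> List[float]:
    """Discrete-time SI model: each step, infections grow by beta * I * S / N.

    beta must be in (0, 1] so the infected count never exceeds the population.
    """
    if not 0 < beta <= 1:
        raise ValueError("beta must be in (0, 1]")
    infected = float(initially_infected)
    history = [infected]
    for _ in range(steps):
        susceptible = population - infected
        infected += beta * infected * susceptible / population
        history.append(infected)
    return history


if __name__ == "__main__":
    # Hypothetical numbers: 100,000 vulnerable hosts, 10 initial infections.
    curve = si_worm(population=100_000, initially_infected=10, beta=0.8, steps=30)
    half = next(step for step, n in enumerate(curve) if n >= 50_000)
    print(f"half the vulnerable population infected after {half} steps")
    print(f"infected after {len(curve) - 1} steps: {curve[-1]:,.0f}")
```

The curve is the familiar S shape: slow at first, then nearly the whole vulnerable population within a handful of steps. Real worms deviate from this model in many ways, but the absence of a human in the loop is the part that matters here.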

But all our defenses and policy regulations and laws and language have been built around botnets. The very idea of what attribution really means, Wassenaar's cyber controls, and how we handle vulnerability disclosure as a nation-state are just some examples of this. But in the long run, both defense and offense will be using forms of self-replicating programs that we don't have any kind of conceptual legal language to describe.

Part of the problem is that computer worms are not worms. Technically they are more like social insects building complex covert networks and distributed data structures on stolen computation. Worms are connected creatures - they are literally a tube from mouth to anus! Worms have a brain for command and control. This is how Metasploit works, basically.

Ants don't have and don't need a C2 and are much closer in terms of a model for what we will see on computer networks.

Trendlines

The key thing to realize is this: Smaller players are inevitably getting into the cyberwar game. That means more worms. Why? Because the fewer resources you have, the better a worm fits your strategic equation. If you're Finland and you're trying to replicate the QUANTUM infrastructure, you are crazy. This applies equally to small countries and to non-nation-state players.

Likewise, the better defenses get at catching intrusions, the more worms you're going to have. Until recently, vulnerabilities almost never got caught in use. But modern defenses have changed that: Microsoft, for example, caught an A-grade team they call PLATINUM. Kaspersky has also been excellent at catching non-Russian A-grade teams! :)

Exploits are going to start getting caught, which means they will be created and used very differently than over the last ten years. Our policy teams are not ready for this change. Worms are an attacker's answer to the race to get something done before getting caught by endpoint defenses and advanced analytics. And counter-worms are the obvious defensive answer for cleaning up unmanaged systems.

Fallacy: Some Bugs are Wormable vs Some Are Not Wormable

I'd like to take a moment to talk about this, because the policy world has this conception that some bugs are wormable, and should therefore go through the VEP process for disclosure, while other bugs are not, and are therefore less dangerous. It's super wrong. All bugs can be part of a worm. Modern worms are cross-platform. They can use XSS vulns and buffer overflows and logic bugs and stolen passwords and timing attacks all at once, get their information from all sorts of sources, spit out versions of themselves that carry only some of their logic, and auto-remove themselves from machines they don't need. Frankly, they act more and more like ants.

But many policy proposals try to draw a line between different kinds of vulnerabilities by saying "These vulnerabilities are safe to have and use, and these are not". No such line exists in practice.
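The "no such line" point can be illustrated as a graph reachability question. Here is a minimal sketch, assuming a hypothetical network in which each edge records which kind of bug lets a foothold on one host reach another (the hosts, edges, and bug labels are all invented). Restricting the search to the one bug class a policy might call "wormable" reaches almost nothing, while chaining every class reaches the domain controller.

```python
from collections import deque
from typing import List, Set, Tuple

# Hypothetical network: (src, dst, bug) means a foothold on src lets a
# self-propagating program reach dst by using that kind of bug.
EDGES: List[Tuple[str, str, str]] = [
    ("internet", "webserver", "xss"),
    ("webserver", "jumpbox", "stolen_password"),
    ("jumpbox", "fileserver", "buffer_overflow"),
    ("fileserver", "dc", "logic_bug"),
    ("internet", "vpn", "buffer_overflow"),
]


def reachable(start: str, allowed_bugs: Set[str]) -> Set[str]:
    """Breadth-first search over edges whose bug type is in allowed_bugs."""
    seen = {start}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        for src, dst, bug in EDGES:
            if src == host and bug in allowed_bugs and dst not in seen:
                seen.add(dst)
                queue.append(dst)
    return seen


if __name__ == "__main__":
    only_wormable = reachable("internet", {"buffer_overflow"})
    everything = reachable("internet", {bug for _, _, bug in EDGES})
    print(sorted(only_wormable))
    print(sorted(everything))
```

Real worms pick whatever edges are available, which is why labelling only one class of bug as dangerous says little about what a self-propagating program can actually reach.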

Policy Implications of the New Age of Worms

There are so many. I just want people to admit this is the age we are in and that our policy teams have all been trained on the Age of APT, which is now over. Let's start there. :)

Monday, July 3, 2017

Export Control, AI, and Ice Giants

If you look at "Export Control" in the face of the Internet, the changes in our societies and nation-states, and the rise of 3D printing, you have to ask yourself: Is this still a thing?

Most of the policy world turns up its nose at the idea that control regimes can fail at all. Their mantra is that "it worked for nuclear, it'll work for anything". That's the "Frost Giants" argument applied to control regimes in the sense of "We did export control, and we're not dead, so this stuff must work!"

And I haven't read a ton of papers or books recently that have even admitted that control regimes CAN FAIL. But recently I ordered a sample from a 3D printer company showing off their new carbon fiber technique. They will send one to you for free, and if you've only experienced consumer 3D printer technology (which is basically a glue gun controlled by a computer), then it's worth doing.

The newer 3D printers can create basically anything. Guns are the obvious thing (and are mostly made of plastic anyway), but machined parts of any kind are clearly next. What does that mean for trademarks and copyrights, which are themselves a complex world-spanning control regime? Are we about to get "Napstered" in physical goods? Or rather, the question is not "if" but "how soon". Did it already happen and we didn't notice?

If we're going to look at failures, then missile control is an obvious one. This podcast (click here now!) from Arms Control Wonk (which is a GREAT policy podcast) demonstrates a few things towards the end:

- How reluctant people are to THINK about control regime failure

- How broken the missile control regimes are in some very complex and interesting ways. For example, when inertial guidance becomes good enough and cheap enough, missiles that were previously only good for nuclear warheads basically become artillery.
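The artillery point follows from a standard single-shot kill probability approximation for a point target, assuming circular-normal miss distances: P_k = 1 - 0.5^((R/CEP)^2), where R is the warhead's lethal radius and CEP is the circular error probable. The sketch below uses hypothetical numbers chosen only to show the shape of the effect: as CEP shrinks from a kilometer to ten meters, a small conventional warhead goes from nearly useless to nearly certain, which is what lets a formerly nuclear-only delivery system be used like artillery.

```python
def single_shot_kill_probability(lethal_radius_m: float, cep_m: float) -> float:
    """P_k = 1 - 0.5 ** ((R / CEP) ** 2) for a point target.

    Assumes miss distances follow a circular normal distribution, in which case
    CEP is the radius that contains half of all impacts.
    """
    if lethal_radius_m < 0 or cep_m <= 0:
        raise ValueError("lethal radius must be >= 0 and CEP must be > 0")
    return 1.0 - 0.5 ** ((lethal_radius_m / cep_m) ** 2)


if __name__ == "__main__":
    # Hypothetical conventional warhead with a 50 m lethal radius.
    for cep in (1000.0, 100.0, 10.0):
        pk = single_shot_kill_probability(50.0, cep)
        print(f"CEP {cep:>6.0f} m -> P_k {pk:.3f}")
```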

Acknowledging that export control needs to change fundamentally is going to be a big step. And if I had my way (who knows, I might!) we would build sunsets into most export controls, with a timeline of around one to five years for most of them, control far fewer categories, and maybe make the controls work with the rest of our policy.
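Sunsets are easy to state precisely, which is part of their appeal. A minimal sketch, assuming each control is just a named record with an enactment date and a sunset of one to five years (the `Control` class and the example entry are hypothetical, not any real control list format): an expired control simply stops applying unless someone deliberately re-enacts it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Control:
    """Hypothetical export-control entry with a built-in sunset."""
    name: str
    enacted: date
    sunset_years: int

    def __post_init__(self) -> None:
        # Enforce the rough one-to-five-year window proposed in the post.
        if not 1 <= self.sunset_years <= 5:
            raise ValueError("sunset_years must be between 1 and 5")

    def expires(self) -> date:
        year = self.enacted.year + self.sunset_years
        try:
            return self.enacted.replace(year=year)
        except ValueError:
            # Enacted on Feb 29 and the expiry year is not a leap year.
            return self.enacted.replace(year=year, day=28)

    def active_on(self, day: date) -> bool:
        return self.enacted <= day < self.expires()


if __name__ == "__main__":
    control = Control("hypothetical CPU-speed control", date(2017, 7, 3), 2)
    print(control.expires())
    print(control.active_on(date(2018, 1, 1)), control.active_on(date(2020, 1, 1)))
```

The design choice is that expiry is the default: keeping a control alive requires an explicit new entry, rather than removing one requiring an explicit decision.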

AI as a case example

Take a quick gander at these graphics or click this link to see directly - dotted red line is "as good as a human":

The basic sum of this story: anything you can teach a human to recognize, a computer can do better in the cloud, and then eventually on your phone.

The Immunity analysis has always been that there are about five computers in the world, and they all have names: Google, Alexa, Cortana, Siri, Baidu, etc. Our export control regimes are still trying to control the speed of CPUs, which is insane. Moore's law is dead, every chip company is working real hard on heat control now, chips don't even run at one speed anymore, and if you ran all the parts in your laptop's CPU at top speed it would melt like cheese on a Philly sandwich.
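The heat point can be made concrete with the textbook first-order model of CMOS dynamic power (a standard approximation, not something from the original analysis; α is the activity factor, C the switched capacitance, V the supply voltage, f the clock frequency). Because reaching a higher clock typically also requires a higher supply voltage, power grows much faster than linearly with frequency, which is why chips throttle and power down parts of the die instead of running everything at peak:

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f,
\qquad
V \propto f \;\Rightarrow\; P_{\text{dyn}} \propto f^{3}
```

The V ∝ f step is a rough simplification that only holds over part of a chip's operating range; the point is the superlinear growth, not the exact exponent, and it is why "CPU speed" is a poor single number to control.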

But there are no computers by our definition in Europe or Russia - they are the third world in the information age. Which at least in the case of Europe is not something they are used to or enjoy thinking about.

Read it and weep.

Looking at (and emulating) China's plan may be a good first step. But what are they really doing?

- Funding scientific research in the area of Deep Learning

- Funding companies (big and small) doing operational experiments in AI

- Creating an AI National Lab

- Making it impossible for foreign AI companies to compete in China (e.g., Google)

- "Rapidly Gathering Foreign AI talent" and encouraging foreign companies to put research centers in China

- Analyzing how Government policies need to change in order to accept AI. MAKING SOCIETY FIT TECHNOLOGY instead of MAKING TECHNOLOGY FIT SOCIETY. Such an overlooked and important part of the Chinese Government's genius on this kind of issue.

- Probably a whole ton of really covert stuff!

Right now, the policy arms of the United States are still wrapped up in Encryption and backdoors on phones and "Going Dark" and the Europeans are in an even sillier space, trying to ban "intrusion software". These debates are colossally stupid. It's arguing over the temperature of the tea served on board an old wooden sailboat while next door the dry dock is putting together an Aegis Cruiser!

AI is the whole pie. It's what game changing looks like when your imagination allows itself to believe in game changing events. It's as life altering to a nation-state as pregnancy is to a marriage. We can't afford to fail, as a society, but we may have to throw out everything we know about control regimes to succeed.
