This is a non-trivial part of being in offense or high-level defense.

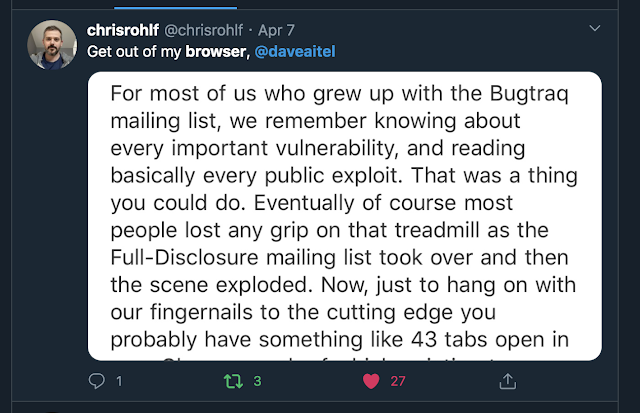

I recently wrote on the technical mailing list DD about the vulnerability treadmill, which is essentially the huge workload taken on by every technical person in the industry to keep up with the vast amount of exploit information released daily. This firehose of information is distinct from the databases set up by various agencies, which are used as lexicons (CVD/CVE/etc.) so that various products can, in theory, talk to each other over XML pipes.

When talking to policy groups, I like to compare an offensive researcher's lifestyle to spending a few hours a day reading every patent that comes out in a particular field. I do this because you often see news articles about how China has exceeded the US in patents in some area or another, based on patent application counts.

But CONTENT IS A LEADING INDICATOR. If any five random Chinese patents are ten times as interesting for a professional to read as any five US patents, then you know what's up without having to do the math on who has more. It is the same with vulnerabilities.

One of the things that distresses technical experts in this area is the policy focus on "patching". Patching is not nearly as important as people (in particular, the Cyberspace Solarium's software liability section) make it sound. If you look at two recent vulnerabilities, in the Citrix Netscaler and the Symantec Web Gateway, you don't see "patchable" vulns.

The first thing to notice about the Symantec Web Gateway exploit (here) is that it only works if an upload directory has been created on the device. I'm not sure how common that is. The other thing to note is that the product appears to be written in PHP and to contain a million other bugs, so I don't really care whether this particular bug is realistic or not. It's basically impossible to write secure software in PHP or Perl, languages that exist only to prove how hard it can be to write secure software in them.

The Citrix Netscaler exploit sends a similar message: "Your purchasing department failed, and no patch is available for that kind of governance mistake."

"The bug here is ... someone installed PERL and decided to use it on their VPN"

This kind of vulnerability does not exist on equipment when your purchasing department has done its due diligence. You don't patch that kind of issue; you rip the equipment out and fire your purchasing manager.

And in fact, banks regularly do this! Josh Corman had a panel on software liability in which he discussed a scenario where banks take all the risk and software vendors take none. But this is not true! Banks are extremely tough customers, and for a long time the majority of Immunity's business was reviewing the code of various products banks wanted to purchase, BEFORE THEY PURCHASED IT. If we found vulnerabilities that indicated poor code quality, or if the vendor didn't have a process to handle the vulnerabilities we found, they simply didn't buy it.

But what does this bring to a policy discussion? Here are three things you learn from staying on that treadmill:

- Patching is often just a quality signal; for a lot of very complex reasons, it often can't be used as a metric.

- The Chinese are actually better at cyber than we are right now. We are the "near peer" in cyberspace. I've read all their public exploits and... that's the state of the art. Thinking otherwise is egotism.

- Any norms process is going to have to include a much broader group of countries than just the top three. The Scandinavian countries, South Korea, Japan, and a huge host of "secondary cyber powers" are all far past the point of no return when it comes to capabilities. It is as if we were starting the nuclear norms conversation but had to take everyone's views into account, including those of Uzbekistan and Greenpeace. This may color your projections of how realistic these norms discussions are.
